AFTER deconstructing 2,000-year-old proxy-temperature series back to their most basic components, and then rebuilding them using the latest big data techniques, John Abbot and I show what global temperatures might have done in the absence of an industrial revolution. The results from this novel technique, just published in GeoResJ [1], accord with climate sensitivity estimates from experimental spectroscopy but are at odds with output from General Circulation Models.

According to mainstream climate science, most of the recent global warming is our fault – caused by human emissions of carbon dioxide. The rationale for this is a speculative theory about the absorption and emission of infrared radiation by carbon dioxide that dates back to 1896. It’s not disputed that carbon dioxide absorbs infrared radiation; what is uncertain is the sensitivity of the climate to increasing atmospheric concentrations.

This sensitivity may have been grossly overestimated by Svante Arrhenius more than 120 years ago, with these overestimations persisting in the computer-simulation models that underpin modern climate science [2]. We just don’t know; in part because the key experiments have never been undertaken [2].

What I do have are whizz-bang gaming computers that can run artificial neural networks (ANN), which are a form of machine learning: think big data and artificial intelligence.

My colleague, Dr John Abbot, has been using this technology for over a decade to forecast the likely direction of particular stock on the share market – for tomorrow.

Since 2011, I’ve been working with him to use this same technology for rainfall forecasting – for the next month and season [4,5,6]. And we now have a bunch of papers in international climate science journals on the application of this technique, showing it is more skilful than the Australian Bureau of Meteorology’s General Circulation Models for forecasting monthly rainfall.

During the past year, we’ve extended this work to build models to forecast what temperatures would have been in the absence of human-emission of carbon dioxide – for the last hundred years.

We figured that if we could apply the latest data mining techniques to mimic natural cycles of warming and cooling – specifically to forecast twentieth century temperatures in the absence of an industrial revolution – then the difference between the temperature profile forecast by the models, and actual temperatures would give an estimation of the human-contribution from industrialisation.

First, we deconstructed a few of the longer temperature records: proxy records that had already been published in the mainstream climate science literature.

These records are based on things like tree rings and coral cores which can provide an indirect measure of past temperatures. Most of these records show cycles of warming and cooling that fluctuated within a band of approximately 2°C.

For example, there are multiple lines of evidence indicating it was about a degree warmer across western Europe during a period known as the Medieval Warm Period (MWP). Indeed, there are oodles of published technical papers based on proxy records that provide a relatively warm temperature profile for this period [7], corresponding with the building of cathedrals across England, and before the Little Ice Age when it was too cold for the inhabitation of Greenland.

I date the MWP from AD 986 when the Vikings settled southern Greenland, until 1234 when a particularly harsh winter took out the last of the olive trees growing in Germany. I date the end of the Little Ice Age as 1826, when Upernavik in northwest Greenland was again inhabitable – after a period of 592 years.

The modern inhabitation of Upernavik also corresponds with the beginning of the industrial age. For example, it was on 15 September 1830 that the first coal-fired train arrived in Liverpool from Manchester, which some claim as the beginning of the modern era of fast, long-distance, fossil-fuel-fired transport for the masses.

So, the end of the Little Ice Age corresponds with the beginning of industrialisation. But did industrialisation cause the global warming?

In our just published paper in GeoResJ, we make the assumption that an artificial neural network (ANN) trained on proxy temperature data up until 1830, would be able to forecast the combined effect of natural climate cycles through the twentieth century.

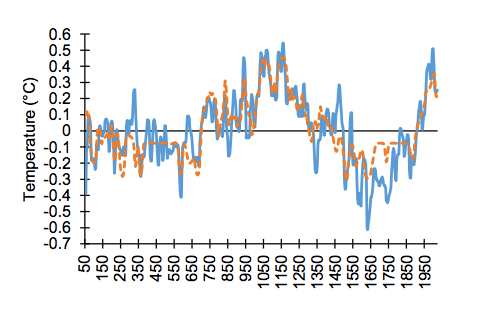

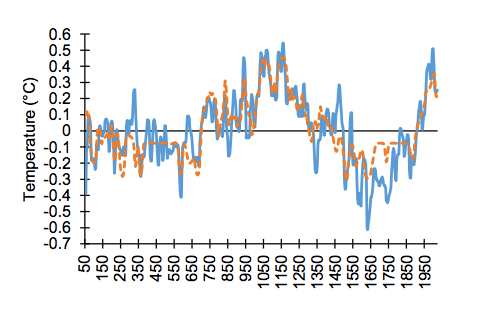

We deconstructed six proxy series from different regions, with the Northern Hemisphere composite discussed here. This temperature series begins in AD 50, ends in the year 2000, and is derived from studies of pollen, lake sediments, stalagmites and boreholes. Typical of most such proxy temperature series, when charted it zigzags up and down within a band of perhaps 0.4°C on a short time scale of perhaps 60 years. Over the longer, nearly 2,000-year period of the record, it shows a rising trend which peaks in AD 1200 before trending down to AD 1650, and then rising to about 1980, then dipping to the year 2000, as shown in Figure 12 of our new paper in GeoResJ.

The decline at the end of the record is typical of many such proxy-temperature reconstructions and is known within the technical literature as “the divergence problem”. To be clear, while the thermometer and satellite-based temperature records generally show a temperature increase through the twentieth century, the proxy record, which is used to describe temperature change over the last 2,000 years – a period that predates thermometers and satellites – generally dips from 1980, at least for Northern Hemisphere locations, as shown in Figure 12. This is particularly the case with tree ring records. Rather than address this issue, key climate scientists have been known to graft instrumental temperature series onto the proxy record from 1980 to literally ‘hide the decline’ [8].

Using the proxy record from the Northern Hemisphere composite, decomposing this through signal analysis and then using the resulting component sine waves as input into an ANN, we generated a forecast for the period from 1830 to 2000.
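To make the decompose-then-forecast idea concrete, here is a minimal sketch in Python. It is not the code or software used in our paper: the temperature series is synthetic, the component periods are picked from a plain FFT (so they are limited to the FFT's frequency grid), and an ordinary least-squares fit stands in for the ANN. The function names `dominant_periods` and `sine_features` are made up for illustration.

```python
import numpy as np

def dominant_periods(years, temps, n_components=3):
    """Return the periods (in years) of the strongest spectral peaks."""
    detrended = temps - temps.mean()
    spectrum = np.abs(np.fft.rfft(detrended))
    freqs = np.fft.rfftfreq(len(years), d=years[1] - years[0])
    # Skip the zero-frequency bin, then keep the largest amplitudes.
    order = np.argsort(spectrum[1:])[::-1][:n_components] + 1
    return 1.0 / freqs[order]

def sine_features(years, periods):
    """Build a constant column plus a sine and cosine column per period."""
    cols = [np.ones_like(years, dtype=float)]
    for p in periods:
        cols.append(np.sin(2 * np.pi * years / p))
        cols.append(np.cos(2 * np.pi * years / p))
    return np.column_stack(cols)

# Synthetic stand-in for a proxy record (ASSUMPTION: not real data).
years = np.arange(50, 2001, dtype=float)
rng = np.random.default_rng(0)
temps = (0.3 * np.sin(2 * np.pi * years / 1000.0)
         + 0.1 * np.sin(2 * np.pi * years / 60.0)
         + 0.02 * rng.standard_normal(years.size))

# Train only on the period up to 1830, then project forward to 2000.
train = years <= 1830
periods = dominant_periods(years[train], temps[train])
weights, *_ = np.linalg.lstsq(
    sine_features(years[train], periods), temps[train], rcond=None)
forecast = sine_features(years[~train], periods) @ weights
```

The point of the sketch is only the workflow: nothing after 1830 is seen during fitting, so `forecast` is a pure extrapolation of the cycles found in the earlier record.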

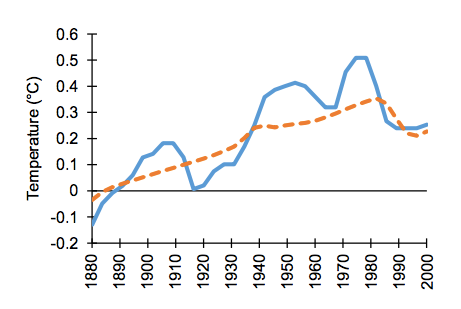

Figure 13 from our new paper in GeoResJ shows the extent of the match between the proxy-temperature record (blue line) and our ANN forecast (orange dashed line) from 1880 to 2000. Both the proxy record and our ANN forecast (trained on data that predates the Industrial Revolution) show a general increase in temperatures to 1980, and then a decline.

The average divergence between the proxy temperature record from this Northern Hemisphere composite and the ANN projection for the period 1880 to 2000 is just 0.09 °C. This suggests that even if there had been no industrial revolution and burning of fossil fuels, there would have still been some warming through the twentieth century – to at least 1980.
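For readers who want to see what an "average divergence" looks like as arithmetic, here is a small Python sketch. It assumes the divergence is the mean of the absolute year-by-year differences between the two series; the signed mean is included as well because it shows which series runs warmer. The function names and the toy numbers are illustrative only and are not values from our paper.

```python
import numpy as np

def mean_absolute_divergence(proxy, forecast):
    """Average size of the gap between two series, ignoring its sign."""
    proxy = np.asarray(proxy, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    if proxy.shape != forecast.shape:
        raise ValueError("series must cover the same years")
    return float(np.mean(np.abs(proxy - forecast)))

def mean_signed_divergence(proxy, forecast):
    """Average of proxy minus forecast; positive means the proxy is warmer."""
    proxy = np.asarray(proxy, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    if proxy.shape != forecast.shape:
        raise ValueError("series must cover the same years")
    return float(np.mean(proxy - forecast))

# Toy anomalies in degrees Celsius (ASSUMPTION: made-up numbers).
proxy_toy = [0.10, 0.30]
forecast_toy = [0.20, 0.10]
abs_gap = mean_absolute_divergence(proxy_toy, forecast_toy)
signed_gap = mean_signed_divergence(proxy_toy, forecast_toy)
```

The two measures can differ a lot: positive and negative gaps cancel in the signed mean but add up in the absolute mean.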

Considering the results from all six geographic regions as reported in our new paper, output from the ANN models suggests that warming from natural climate cycles over the twentieth century would be in the order of 0.6 to 1 °C, depending on the geographical location. The difference between output from the ANN models and the proxy records is at most 0.2 °C; this was the situation for the studies from Switzerland and New Zealand. So, we suggest that at most, the contribution of industrialisation to warming over the twentieth century would be in the order of 0.2°C.

The Intergovernmental Panel on Climate Change (IPCC) estimates warming of approximately 1°C, but attributes all of it to industrialisation.

The IPCC comes up with a very different assessment because they essentially remodel the proxy temperature series, before comparing them with output from General Circulation Models. For example, the last IPCC Assessment report concluded that,

“In the northern hemisphere, 1983-2012 was likely the warmest 30-year period of the last 1,400 years.”

If we go back 1,400 years, we have a period in Europe immediately following the fall of the Roman empire, and predating the MWP. So, clearly the IPCC denies that the MWP was as warm as current temperatures.

This is the official consensus science: that temperatures were flat for 1,300 years and then suddenly kick-up from sometime after 1830 and certainly after 1880 – with no decline at 1980.

To be clear, while mainstream climate science is replete with published proxy temperature studies showing that temperatures have cycled up and down over the last 2,000 years – spiking during the Medieval Warm Period and then again recently to about 1980 as shown in Figure 12 – the official IPCC reconstructions (which underpin the Paris Accord) deny such cycles. Through this denial, leaders from within this much-revered community can claim that there is something unusual about current temperatures: that we have catastrophic global warming from industrialisation.

In our new paper in GeoResJ, we not only use the latest techniques in big data to show that there would very likely have been significant warming to at least 1980 in the absence of industrialisation, we also calculate an Equilibrium Climate Sensitivity (ECS) of 0.6°C. ECS is the temperature increase expected from a doubling of carbon dioxide concentrations in the atmosphere. Our value is an order of magnitude less than estimates from General Circulation Models, but in accordance with values generated from experimental spectroscopic studies, and other approaches reported in the scientific literature [9,10,11,12,13,14].
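As a rough illustration of how a warming figure can be turned into a per-doubling sensitivity, here is a short Python sketch. It assumes the commonly used simplification that warming scales with the logarithm of the carbon dioxide concentration, and it ignores the distinction between transient and equilibrium response. The concentrations of roughly 295 ppm for 1900 and 370 ppm for 2000 are approximate values chosen for the example, and the function name is hypothetical; this is not a reproduction of the calculation in our paper.

```python
import math

def sensitivity_per_doubling(delta_t, co2_start_ppm, co2_end_ppm):
    """Warming per CO2 doubling, assuming a logarithmic response."""
    if co2_start_ppm <= 0 or co2_end_ppm <= co2_start_ppm:
        raise ValueError("need 0 < co2_start_ppm < co2_end_ppm")
    # Number of doublings covered by the concentration change.
    doublings = math.log2(co2_end_ppm / co2_start_ppm)
    return delta_t / doublings

# ASSUMPTION: 0.2 C attributed to industrialisation, CO2 from ~295 to ~370 ppm.
estimate = sensitivity_per_doubling(0.2, 295.0, 370.0)
```

With these illustrative inputs the estimate comes out close to 0.6°C per doubling; different choices for the start and end concentrations would shift it.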

The science is far from settled. In reality, some of the data is ‘problematic’, the underlying physical mechanisms are complex and poorly understood, the literature voluminous, and new alternative techniques (such as our method using ANNs) can give very different answers to those derived from General Circulation Models and remodelled proxy-temperature series.

Key References

Scientific publishers Elsevier are making our new paper in GeoResJ available free of charge until 30 September 2017, at this link:

https://authors.elsevier.com/a/1VXfK7tTUKabVA

1. Abbot, J. & Marohasy, J. 2017. The application of machine learning for evaluating anthropogenic versus natural climate change, GeoResJ, Volume 14, Pages 36-46. http://dx.doi.org/10.1016/j.grj.2017.08.001

2. Abbot, J. & Nicol, J. 2017. The Contribution of Carbon Dioxide to Global Warming, In Climate Change: The Facts 2017, Institute of Public Affairs, Melbourne, Editor J. Marohasy, Pages 282-296.

4. Abbot, J. & Marohasy, J. 2017. Skilful rainfall forecasts from artificial neural networks with long duration series and single-month optimisation, Atmospheric Research, Volume 197, Pages 289-299. DOI: 10.1016/j.atmosres.2017.07.01

5. Abbot, J. & Marohasy, J. 2016. Forecasting monthly rainfall in the Western Australian wheat-belt up to 18-months in advance using artificial neural networks. In AI 2016: Advances in Artificial Intelligence, Eds. B.H. Kang & Q. Bai. DOI: 10.1007/978-3-319-50127-7_6.

6. Abbot, J. & Marohasy, J. 2012. Application of artificial neural networks to rainfall forecasting in Queensland, Australia. Advances in Atmospheric Sciences, Volume 29, Number 4, Pages 717-730. DOI: 10.1007/s00376-012-1259-9.

7. Soon, W. & Baliunas, S. 2003. Proxy climatic and environmental changes of the past 1000 years, Climate Research, Volume 23, Pages 89–110. doi:10.3354/cr023089.

8. Curry, J. 2011. Hide the Decline, https://judithcurry.com/2011/02/22/hiding-the-decline/

9. Harde, H. 2014. Advanced two-layer climate model for the assessment of global warming by CO2. Open J. Atmospheric Climate Chang. Volume 1, Pages 1-50.

10. Lightfoot, HD & Mamer, OA. 2014. Calculation of Atmospheric Radiative Forcing (Warming Effect) of Carbon Dioxide at any Concentration. Energy and Environment Volume 25, Pages 1439-1454.

11. Lindzen, RS & Choi, Y-S. 2011. On the observational determination of climate sensitivity and its implications. Asia-Pacific Journal of Atmospheric Sciences, Volume 47, Pages 377-390.

12. Specht, E, Redemann, T & Lorenz, N. 2016. Simplified mathematical model for calculating global warming through anthropogenic CO2. International Journal of Thermal Science, Volume 102, Pages 1-8.

13. Laubereau, A & Iglev, H. 2013. On the direct impact of the CO2 concentration rise to the global warming, Europhysics Letters, Volume 104, Pages 29001.

14. Wilson, DJ & Gea-Banacloche, J. 2012. Simple model to estimate the contribution of atmospheric CO2 to the Earth’s greenhouse effect. American Journal of Physics, Volume 80, Pages 306-315.

Jennifer Marohasy BSc PhD is a critical thinker with expertise in the scientific method.

Excellent, easy to read and understand and totally plausible. We need you to keep up this great work.

I completely agree with Mr. Doogue. When I finished the article, I stood up in my living room and applauded you wildly.

Please keep up the good work.

William Taylor

Your #1 American Fan

For a long time I’ve said that the warming could be natural. Then I started investigating the discrepancy between various datasets, regional warming and then started looking at day by day warming within the N.American “hotspot”.

The result suggests that there is a man-made component to the regional hotspots – which can explain both the discrepancy between various datasets as well as the 1970-2000 warming.

However, at least part of the warming could also be explained by the pattern of decommissioning of temperature stations. And with your own work, there are now so many ways to explain the minuscule change we saw that it beggars belief that some people are still asserting massive positive feedbacks associated with CO2 as if it is impossible to explain the temperature change without them.

http://scottishsceptic.co.uk/2017/04/09/the-cause-of-1970-2000-warming/

This article brings a lot of new information and shows the weakness of the alarmist theory. Thank you very much for reporting in such an easy and engaging language!

Jennifer, may I ask who peer reviewed the paper you published on GeoResJ. Did your conclusions face resistance or was it well received? Did you need to resubmit after making revisions? Thanks. Need to know before I use this as a source. I like to have those questions answered before they are even asked.

Hi Marshall, I would like to thank GeoResJ for publishing our paper. We did get some very good critical feedback through the peer-review process. We made all the many revisions requested.

Congratulations on an excellent publication. ECS of ~0.6 agrees well with my own engineering estimate derived from an empirical fit to SST data.

Why does it decline after 1980? I thought that was when most of the warming took place? Very confusing.

Brilliant work. Using neural networks would definitely be the way to go for this type of modeling and forecasting. I’m surprised no one else has made similar attempts and if they have I wonder what their results were.

Jennifer, I have saved every article I could find on the Medieval Warming Period for many years and I totally agree. One I have stated “the average life expectancy grew by 10 years” during this period due to the less harsh winters and longer growing season. If only people would look at history. great job.

The hard thing about ‘peer-reviewed’ is when one’s so-called peer group is largely made up of money-grubbing data falsifiers.

alternately, one could say that a large portion of climate scientists do not qualify as Marohasy’s peers, as the neutrality of the scientific method is alien to them.

Here is what a part of the Rules Of 32 says

18 The Effect of Global Warming was Global Distrust.

19 The Global Distrust is what Caused its name to change to Climate Change.

20 All the Time Lines show the Ice Increasing as the Sun Dims.

21 The Effect of the Sun CAN NOT be changed by any Effect of Man.

22 These Rules of Cause and Effect can be Broken BY GOD at anytime.

23 The effect of the Butterfly (aka The Butterfly Effect) Caused the Climate to appear to Change.

24 The Effect of the Climate Change was an Ice Age

Your voice of reason is to be applauded.

I’m a biological chemist. My co-workers and I have always laughed at the so called ‘science’ behind global warming. It is sadly what ALL of modern science is becoming. One with an agenda, constantly chasing after liberal dollars. Results are often manufactured and skewed to fit an agenda. There is hardly any real science any more. It is also starting to creep into engineering. Science used to be about questioning and testing results over and over to verify. This is hardly even taught anymore. Peer review is often a joke, especially in the field of global warming (I refuse to call it climate change). Data has been found to be corrupted time after time and still their agenda of redistribution of wealth presses on. Liberalism is a disease and needs to be eradicated.

Very interesting results, Jennifer! Would you perhaps be willing to share the raw data?

Hi Jennifer.

Very interesting paper.

As a matter of interest how does the ANN projection work in the period from 1980 to the present day. And how does this compare to measured temps by satellite and thermometer?

Your article seems terrific for attribution in 20th century – but I have doubts about cycles in a spatio/temporal chaotic flow field.

https://www.facebook.com/Australian.Iriai/posts/1393239357458989

Michael,

Thanks for your comment, and request for the raw data. Rather than have arguments over ‘raw data’, we simply worked from proxy-temperature series as already published in the mainstream climate science literature. So, the proxy temperature series we used are already available; see the published studies as cited in our paper.

Philip J Nord,

Thanks for your query – and for being brave enough to potentially rethink what you thought you knew!

The proxy temperature data shows decline after 1980 – not all series for all regions, but much of it does.

The raw instrumental temperature data for East coast Australia (the data that I am most familiar with) tends to show overall cooling to about 1960, and then warming to the present. You can read about some of this data in a published study by me here: http://www.sciencedirect.com/science/article/pii/S0169809515002124?via%3Dihub

To get some background on how much the raw thermometer data is fiddled with, consider reading this report by me: http://jennifermarohasy.com/wp-content/uploads/2011/08/Changing_Temperature_Data.pdf

Stickywicket,

What you get from an ANN is going to largely depend on what you feed in… which is why I have been making such a fuss lately about the integrity of the raw data.

Over the last few years we have used this approach/ANNs to forecast rainfall one to 18 months ahead, but not so far worked with temperature data over this shorter time period.

Hello Jennifer, interesting. Is the program code available for this (generalized regression) NN? What type of validation has been used to select from the trained models? My experience with this type of regression is that it is not “skilled” in forecasting non-stationary time series, because the model cannot “see” beyond the maximum value it has learned. There are solutions to that problem for gradient boosted trees, for instance http://ellisp.github.io/blog/2016/11/06/forecastxgb, but I have not seen the equivalent for neural nets.

I think this graph on WoodForTrees defined by Tony Heller is quite illustrative:

http://www.woodfortrees.org/plot/esrl-amo/mean:60/from:1950/plot/rss/mean:60/offset:-0.1/plot/esrl-co2/scale:0.004/offset:-1.4/plot/uah6/mean:60

Very interesting article! The method seems simple enough to be reproduced. I was wondering if you could elaborate on the software you used to do this study/ the optimization parameters for the neural net, and if you could possibly post/upload your data somewhere accessible? I’d love to repeat this experiment.

Just another physics/computer science student here.

Jennifer,

A number of troubling Tweets in response to my share of your article, including Gavin…

https://twitter.com/hausfath/status/900130391796858881

https://twitter.com/hausfath/status/900130781615374336

https://twitter.com/ClimateOfGavin/status/900341454232371200

There are several issues with the study, some of them addressed here: https://t.co/a6s0u91NU3

The data seems to be from another study ( Moberg et al 2005) and the authors have manipulated with the data. Its also not peer reviewed.

The scientific value of their “findings” is Zero.

Dr Marohasy, Actually I’m not new to the kriging algorithm controversy. I comment quite a bit on climate etc. I’ve had quite a few conversations with Steven Mosher about this. He says algorithms are necessary to homogenize for creation of a global mean temperature. I asked him why they don’t just use raw data and average it. He laughs at averaging as being totally inadequate. He said their method ends up the same anyway. So I ask him why do it then? His reply is that it’s like glasses to see it better. If you can believe that without laughing. Judith Curry thinks they run hot.

I made a linear trend for the average of government data and came up with 0.30 per decade starting in 1980. UAH has a trend of 0.20 per decade. I believe that if you took all the raw data internationally you’d end up about the same as the satellites. What do you think?

Keep up the good work. You stated years ago that a new way to view the data, based on facts and sound techniques/reasoning, was needed.

And you have quietly pursued just that, to excellent effect.

Thank you and best wishes for the coming gauntlet.

BTW, They (Best) pointed to their method of adjusting (homogenizing) anomalous temperatures and infilling that sounded very similar to your Aussie data set except it was in Texas. They said they correct high anomalies as well but of course didn’t show an example. It will be interesting to see the whole international set if you do make one. All my best and keep up the good work. PS I did read your paper previously thanks much Philip

Jennifer,

Fabulous work!!

Most people are completely unaware that in 1906, Svante Arrhenius amended his previous estimations regarding the sensitivity of the atmosphere to CO2 increases. I find it so ODD that while so many AGW/CAGW alarmists are so quick to point to Arrhenius and his “experiments” in 1896 (as if they are conclusive proof of anything on a planetary scale) but absolutely NONE of them mention his work of 1906, in which he admits he hadn’t really considered the influence of water vapor in his previous work, and lowers his estimation regarding how much warming would come from CO2 increases.

Here’s a link to a translation of his speech in 1906, where he outlines his new calculations and even admits that increased CO2 is beneficial to animals. 🙂

https://friendsofscience.org/assets/documents/Arrhenius%201906,%20final.pdf

Moberg et al 2005 only extends through 1979 and is only the land/ocean temps for the northern hemisphere, how does this paper explain the current observed warming over the entire planet?

Hi Simon L

1. Our study has just been published in GeoResJ, it went through the usual peer review process.

2. We acknowledge that we take published proxy records, decompose them into their components parts and then feed them into an artificial neural network. Our objective was to distinguish that component of 20th Century warming that may have been natural, as opposed to human-caused.

3. In the link you provide, Gavin Schmidt YET AGAIN AND MOST INAPPROPRIATELY grafts some instrumental/thermometer record (HadCRUT4) onto the end of our proxy series. This is not appropriate. Our study is of six proxy records.

Joseph Ratiff

You have dropped several Twitter links. You have not explained your specific issue. These links are to some charts and text that are most misleading.

In particular, Gavin Schmidt has inappropriately attempted to graft some instrumental record onto the proxy series. He has applied ‘Mike’s Trick’. It is never appropriate to graft an instrumental record onto the end of a proxy record. He is simply, yet again, attempting to hide the decline – by 35 years or more.

cstout

You don’t appear to have read our paper, or even my blog post…. just a couple of most misleading tweets from Gavin Schmidt.

What we have done in our new paper in GeoResJ is take previously published studies from six geographic regions, deconstruct them into their component parts, and then run these through an Artificial Neural Network.

The six studies do suggest some geographic variability; it is good to acknowledge that there is geographic variability.

Our study does not suggest there is uniform warming over the entire planet – as you suggest.

In accordance with a just published Chinese study, http://english.cas.cn/newsroom/research_news/201708/t20170808_181809.shtml, our study does suggest that there have been past periods of warming.

And to quote from the Abstract of this new study:

“Temperatures recorded in the 20th century may not be unprecedented for the last 2000 years, as data show records for the periods AD 981–1100 and AD 1201–70 are comparable to the present.”

Hi, very interesting take on the climate change situation.

Is this the first study of this type?

Is there anyone who is looking at repeating your study using your methods to confirm your hypothesis?

As a layman, I don’t fully understand your methods, but find this very interesting.

So, as I understand roughly your research is to take out the industrial revolution, to see what happens to global temperatures. You found that statistically, the temperatures follow roughly the same pattern as current global warming in the periods you took your data from.

What happens when you add the industrialisation back into the model? Do we end up with similar warming patterns as measured by the current circulation model, and thus the higher and record temperatures experienced today?

Glad to see someone is still looking at alternatives to the mainstream.

As I said before, very very interesting.

Regards,

Peter Hall. Adelaide.

Have you tried testing your model against more recent data, say 2000-2016?

Svante Arrhenius published a second book in 1899 where he denied the first book all the “warmists” cite. I have a very simple demo-experiment you can do for less than $7, if you have to buy two “stick” thermometers (-10C to +110C), that clearly demonstrates CO2 does not heat the atmosphere. See “CO2 Is Innocent” at http://sciencefrauds.blogspot.com and it has been verified by Dr. James Rust, Ph.D. Nuclear Physics, Georgia Tech, Professor emeritus who wrote, “Very clever demo.”

See my publication listings at WorldCat.org, input “Adrian Vance” and my CV at “Adrian Vance Archive” via Google or Bing.

Jennifer, I am interested in your comment that you are able to predict monthly rainfall more accurately than the Australian Bureau of Meteorology.

What will you do with this information?

It seems to me that the BOM could potentially face a class action from any farmers or businesses relying on the accuracy of its forecasts, especially as it seems to be a proven fact that there has been some tampering with the records.

I really have to question the accuracy of land based temp data across the world.

Africa , one fifth of the world’s land mass, is bigger than the land masses of the US, China, India, Mexico, Peru, France, Spain, Papua New Guinea, Sweden, Japan, Germany, Norway, Italy, New Zealand, the UK, Nepal, Bangladesh and Greece put together.

WMO-

“Because the data with respect to in-situ surface air temperature across Africa is sparse, a one year regional assessment for Africa could not be based on any of the three standard global surface air temperature data sets from NOAANCDC, NASA-GISS or HadCRUT4. Instead, the combination of the Global Historical Climatology Network and the Climate Anomaly Monitoring System (CAMS GHCN) by NOAA’s Earth System Research Laboratory was used to estimate surface air temperature patterns”

The WMO flag up that Africa needs 5000 temp stations. Moreover they add that 70 countries have substandard temp data.

Hi Jennifer,

Thanks for this interesting piece of work. I have a few questions:

1. How were the models validated? Has any investigation been done into possible bias or variance errors?

2. On p. 39 it states: “The discrepancy between the orange and blue lines in recent decades as shown in Fig. 3, suggests that the anthropogenic contribution to this warming could be in the order of approximately 0.2 °C.” Should that not be -0.2 degrees since the ANN predicts higher temperatures than the proxy?

3. Somewhat related, p. 41 gives “an estimated ECS of 0.6 °C for a doubling of atmospheric carbon dioxide”. Is this estimate not strongly positively biased by a) taking the mean absolute error, meaning that observations colder than predicted are actually attributed to warming and b) taking the very high end of the range reported? Based on what I see in the paper, removing these biases would result in an estimate that would probably be close to zero.

Hi Richard Morris,

This is a study of proxy data, ‘more recent data say 2000-2016’ would be instrumental data. It is common in climate science to graft one set of data onto the other, but I would advise caution. We don’t have instrumental data that goes back 2,000 years.

Hi Aran, We used NeuroSolutions Infinity software, with the ANN architecture detailed on page 39 of our paper. As with our rainfall forecasting work, we divide the data into training, validation and test periods 70:15:15. In this instance the validation is incorporated within the training period to 1830.
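For anyone wanting to try a 70:15:15 split on their own series, here is a minimal Python sketch. It assumes the split is made in time order (earliest values for training, latest for testing), which is a common choice for time series but is an assumption here rather than a description of what NeuroSolutions Infinity does internally. The function name is hypothetical.

```python
def chronological_split(series, fractions=(0.70, 0.15, 0.15)):
    """Split a time-ordered sequence into training, validation and test parts."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    n = len(series)
    n_train = round(n * fractions[0])
    n_val = round(n * fractions[1])
    # Whatever remains after training and validation becomes the test part.
    train = series[:n_train]
    validation = series[n_train:n_train + n_val]
    test = series[n_train + n_val:]
    return train, validation, test

# Toy example: 100 consecutive time steps (ASSUMPTION: placeholder data).
train_part, val_part, test_part = chronological_split(list(range(100)))
```

Keeping the parts in time order means the test period is always later than anything the model was fitted or tuned on.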

Hi Socrates

John Abbot and I have been working on a technique for monthly rainfall forecasting since February 2011, and have been publishing on this since 2012. A list of these publications can be found here: http://climatelab.com.au/publications/ . We visited the Bureau in August 2011, and suggested collaborative work. At that time we were told that the Bureau was not interested in what we were doing even if our forecasts were more skilful, because we relied on/assumed natural climate cycles. Also, we were told they had no expertise in this new technology.

Jennifer your article is excellent and points out the simple facts of the last 2000 years temperature changes up to the present. I wrote an article which was published on this very topic 9 months ago and if you are interested, let me know and I will send a copy.

Peter John Hall,

This is our first paper looking at thousand-year long proxy temperature series, decomposing them and then projecting forward to understand what might have been through the 20th Century in the absence of an industrial revolution.

As far as we can tell we are the first to apply this ANN/machine learning technique to separate out natural versus human-caused warming through the 20th Century. And then to calculate an ECS value.

Hopefully many more studies will follow. The ANN software that we used can be downloaded from Neurodimensions Ltd, and the data that we used is from published studies. So, our work can be easily replicated.

A most simple explanation of our technique is this delightful article published by Energy Live News and entitled: ‘Global warming not caused by humans’ says robot. Link here: http://www.energylivenews.com/2017/08/25/global-warming-not-caused-by-humans-says-robot/

A similar study, in so much as it takes long proxy temperature series and decomposes them into their component parts, has just been published by Horst-Joachim Ludecke and Carl-Otto Weiss. Their paper is entitled ‘Harmonic Analysis of Worldwide Temperature Proxies for 2000 Years’, DOI: 10.2174/1874282301711010044

They do not use ANNs, but the concept is nevertheless similar. And here is a YouTube video, with Carl-Otto Weiss explaining the assumptions behind their method: https://www.youtube.com/watch?v=tAELGs1kKsQ&feature=youtu.be

Enjoy.
